This week’s SaaS Barometer Newsletter is brought to you by Drivetrain, which helps you connect your plans to performance with real-time answers, so you’re always one step ahead. Built for CFOs who want to lead with insight.

Drivetrain is an AI-native business planning platform that helps finance teams budget smarter, forecast faster, and report with confidence. Click here to see Drivetrain in action.

🔎 TL;DR

What’s the difference between SaaS and AI-Native solutions?

SaaS redefined how software is sold and delivered over the last two decades. AI-Native software is reshaping how software works and how humans interact with it.

Cloud infrastructure and large language models are foundational to both SaaS and AI-Native software. This week’s SaaS Barometer breaks down the modern software model: its components, who plays where, and how they interconnect.

Why the Modern Software Model Matters

In 2025, nearly every software company claims to be AI-powered. But where a company sits in the modern software model reveals a lot about its competitive moat, cost structure, margins, product differentiation, and the value it delivers to customers.

We break the software ecosystem into five categories that matter, from cloud infrastructure to large language models to the applications shaping how humans work.

But first, let’s answer a question I often get when we publish benchmarks that separate SaaS solutions from AI-Native solutions: “What is the difference between SaaS and AI-Native solutions?”

SaaS (Software as a Service)

Definition:

SaaS refers to cloud-delivered software accessed via the internet and built to provide specific functionality to end users. Examples include CRM, accounting, collaboration, design, and marketing automation software. Users interact through a browser or app without needing to manage hardware, infrastructure, or software installations.

Core Characteristics:

Hosted and managed by the vendor

Delivered via web or mobile interfaces

Licensed via subscription or usage-based pricing

Built for efficiency, scalability, and usability

May incorporate AI—but AI is not core to the product’s value

Primary Value Proposition:

SaaS enables businesses and individuals to complete tasks more efficiently, without needing capital investment in software, IT infrastructure, or ongoing maintenance.

AI-Native Products

Definition:

AI-Native products are built from the ground up with generative AI or machine/deep learning at their core. These products don’t just use AI; they are purpose-built to leverage it.

A simplified definition of AI is:

AI is the simulation of human intelligence by machines, especially computer systems, designed to perform tasks that typically require human cognition, such as learning, reasoning, problem-solving, perception, and language understanding.

Core Characteristics: AI-Native Software

AI (LLMs, SLMs, NLP, ML, etc.) is essential to functionality

Uses techniques like generative AI, machine learning, and deep learning

Requires infrastructure for inference and/or fine-tuning

Often includes RAG, human-in-the-loop, or continuous learning

Cannot function without AI at the core

Primary Value Proposition:

Delivers a "thinking" or generative experience that mimics or enhances human cognitive and creative capabilities. Often, the human initiates or reinforces—but doesn’t drive—the action.

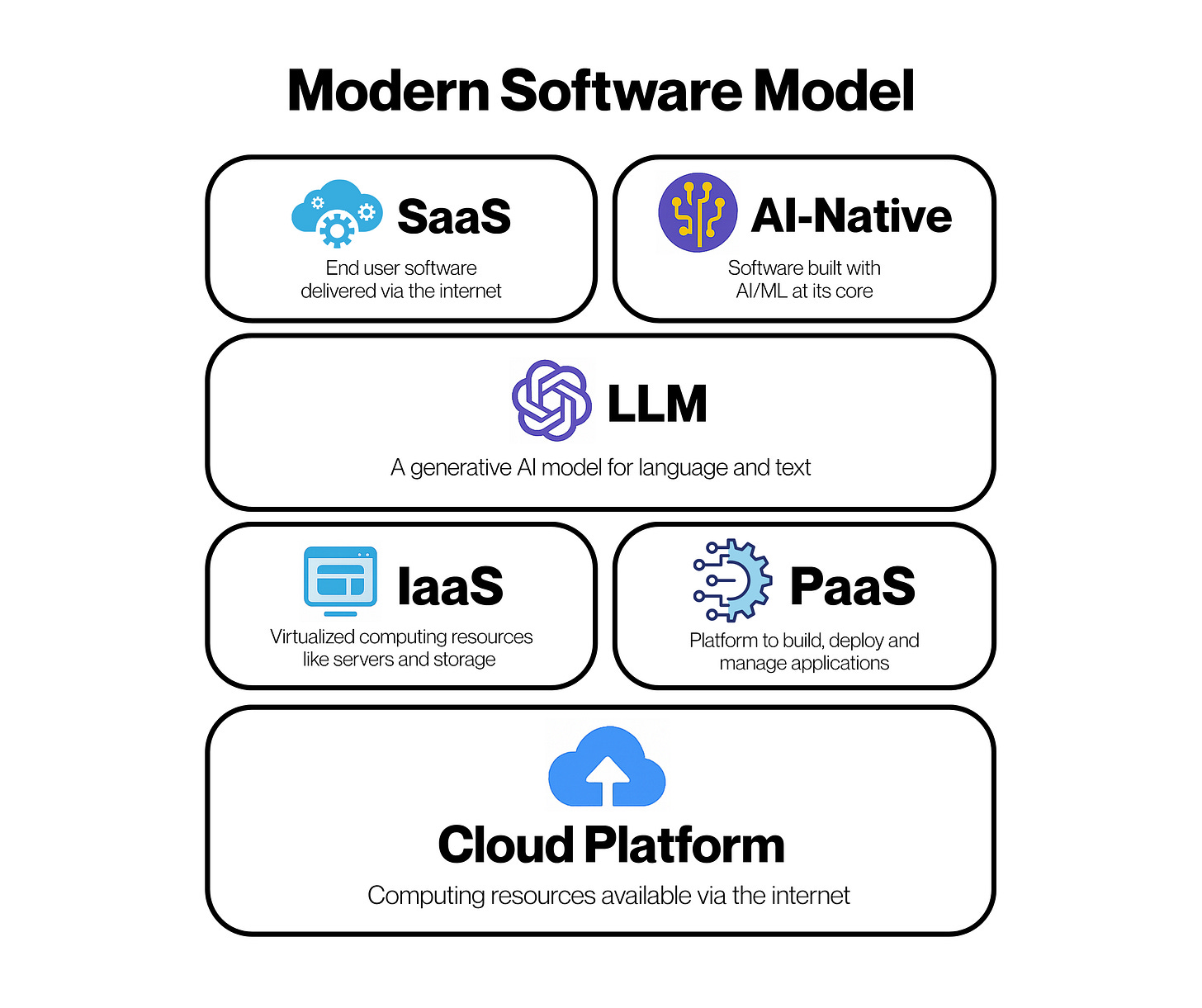

The Five Layers of the Modern Software Model

SaaS vs. AI-Native is only part of the story. Both rely on foundational cloud infrastructure. That’s why we define five categories of the modern “cloud” software model.

Many use "SaaS" and "Cloud" interchangeably—but they refer to different (yet overlapping) concepts. With the rise of AI-Native products, we now face three distinct but interconnected categories: Cloud, SaaS, and AI-Native.

Here’s the structure we use at Benchmarkit to track benchmarks across today’s software categories:

1️⃣ Cloud Platforms

The foundational compute, storage, and networking infrastructure upon which most SaaS, AI-Native, and LLM solutions run.

Examples:

Amazon Web Services (AWS)

Microsoft Azure

Google Cloud Platform (GCP)

Oracle Cloud

IBM Cloud

2️⃣ IaaS / PaaS (Infrastructure / Platform as a Service)

Where developers and platforms build, host, and scale applications and databases. While often lumped into "cloud products," these are distinct sublayers.

IaaS Examples:

AWS EC2

Azure VMs

Google Compute Engine

Oracle Bare Metal

IBM Cloud VMs

PaaS Examples:

MongoDB

Heroku

Firebase

Databricks

Snowflake

Bonus for technical readers: Kubernetes services (GKE, EKS, AKS) often act as the orchestration bridge between IaaS and PaaS.

3️⃣ LLM Providers

LLMs are a new modern software infrastructure layer—companies now build atop them like they once did cloud platforms.

Examples:

OpenAI (GPT-4, GPT-4o) – Azure

Anthropic (Claude) – AWS

Mistral – open-source, AWS/self-hosted

Cohere – multi-cloud, Oracle partnership

Google DeepMind (Gemini) – GCP

4️⃣ SaaS Applications

Traditional software, built for end users. Increasingly infused with AI, but still structured like classic applications.

Examples:

Salesforce (CRM)

Notion (productivity)

Figma (collaboration)

Zoom (communications)

Atlassian (developer tools)

5️⃣ AI-Native Applications

The next frontier. These apps are AI, not just powered by it. A key test is to ask: “If you removed the AI from the product, would the product still work?”

Examples:

Jasper – content generation

Gong – sales intelligence

Perplexity – AI-powered search (RAG)

Synthesia – avatar-based video

Runway – AI video/image editing

Why the Modern Software Model Matters

Cloud platforms and LLM providers are now the infrastructure titans.

Their dominance stems from the capital intensity, technical complexity, and scale required to power the AI era.

The Coming AI Compute Surge

AI compute demand is expected to grow 10x to 1000x over the next decade. Here’s what some of the experts are predicting:


Sam Altman (OpenAI CEO): Compute requirements will grow exponentially, not linearly

Epoch AI: Training compute may increase 10,000x to 1,000,000x by 2030 (vs. a 2020, GPT-3-era baseline)

Inference demand (real-time model use) is expected to surpass training demand by 2026–2027—driven by:

Enterprise SaaS integration

Real-time personal agents

Autonomous systems (e.g., robotics, vehicles)

If unoptimized, AI could consume 10–20% of global electricity by 2030.
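Growth multiples like these are easier to compare as annual rates. The Python sketch below converts a total growth multiple into the constant yearly growth rate that would produce it. It assumes smooth compound growth over exactly 10 years, which is our reading of “the next decade” and of the 2020-to-2030 window in the Epoch AI figure. Real compute growth will be lumpier, and the function names are illustrative only.

```python
import math


def implied_annual_growth(multiple: float, years: float) -> float:
    """Constant annual rate r such that (1 + r) ** years == multiple.

    Assumes smooth compound growth, which is a simplification.
    """
    if multiple <= 0 or years <= 0:
        raise ValueError("multiple and years must be positive")
    return multiple ** (1.0 / years) - 1.0


def doubling_time_years(annual_rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + annual_rate)


# Ranges quoted in the text; the 10-year horizon is our assumption.
scenarios = [
    ("AI compute demand, low end", 10, 10),
    ("AI compute demand, high end", 1_000, 10),
    ("Epoch AI training compute, low end", 10_000, 10),
    ("Epoch AI training compute, high end", 1_000_000, 10),
]

for label, multiple, years in scenarios:
    rate = implied_annual_growth(multiple, years)
    print(
        f"{label}: {multiple:,}x over {years} years "
        f"~ {rate:.0%} per year, doubling every "
        f"{doubling_time_years(rate):.1f} years"
    )
```

Under that assumption, 10x over a decade works out to roughly 26% growth per year, while 1,000x means compute roughly doubling every year (2^10 = 1,024). The Epoch AI range implies roughly 2.5x to 4x growth per year.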

Final Takeaways

✅ SaaS ≠ AI-Native: Bolting on AI doesn't make a product AI-Native. True AI-Native products are built on AI as the core foundation

✅ Infrastructure matters: neither SaaS nor AI-Native apps exist without Cloud Platforms, IaaS, PaaS, and LLM providers

✅ Cloud & LLM providers are the new infrastructure kings

✅ SaaS is evolving, embedding AI—but remains structured around traditional software models

✅ AI-Native is the next generation of SaaS: From content creation to sales, it’s redefining product design and user experience

💡 Operators and investors must understand where a product sits in the modern software model as it defines margin potential, value delivered, pricing models, GTM strategy, and long-term defensibility

🤔 The next edition of the SaaS Barometer newsletter will discuss the operational, organizational and financial differences between legacy SaaS and AI-Native software companies!